Introduction

When we started Autoblocks, we knew we needed to design the platform for quick and cost-effective scaling. This blog post will delve into the architectural decisions that enabled us to seamlessly scale from 0 to over 1 million events daily.

Guiding Principles

From the beginning, our platform was crafted with three core principles in mind:

- Hands-Off Scalability
  - We are growing quickly, so the system needs to be able to automatically adapt to an ever-growing workload without intervention. We don’t have time to tune the system continuously.

- Millisecond-Level Latency
  - When serving a global user base, we need to be able to ingest events from anywhere within milliseconds.

- Bulletproof Reliability
  - Let's face it: if you're missing events, you're flying blind. Our customers rely on us for comprehensive visibility into their LLM products, so we need to deliver reliable ingestion.

Overview

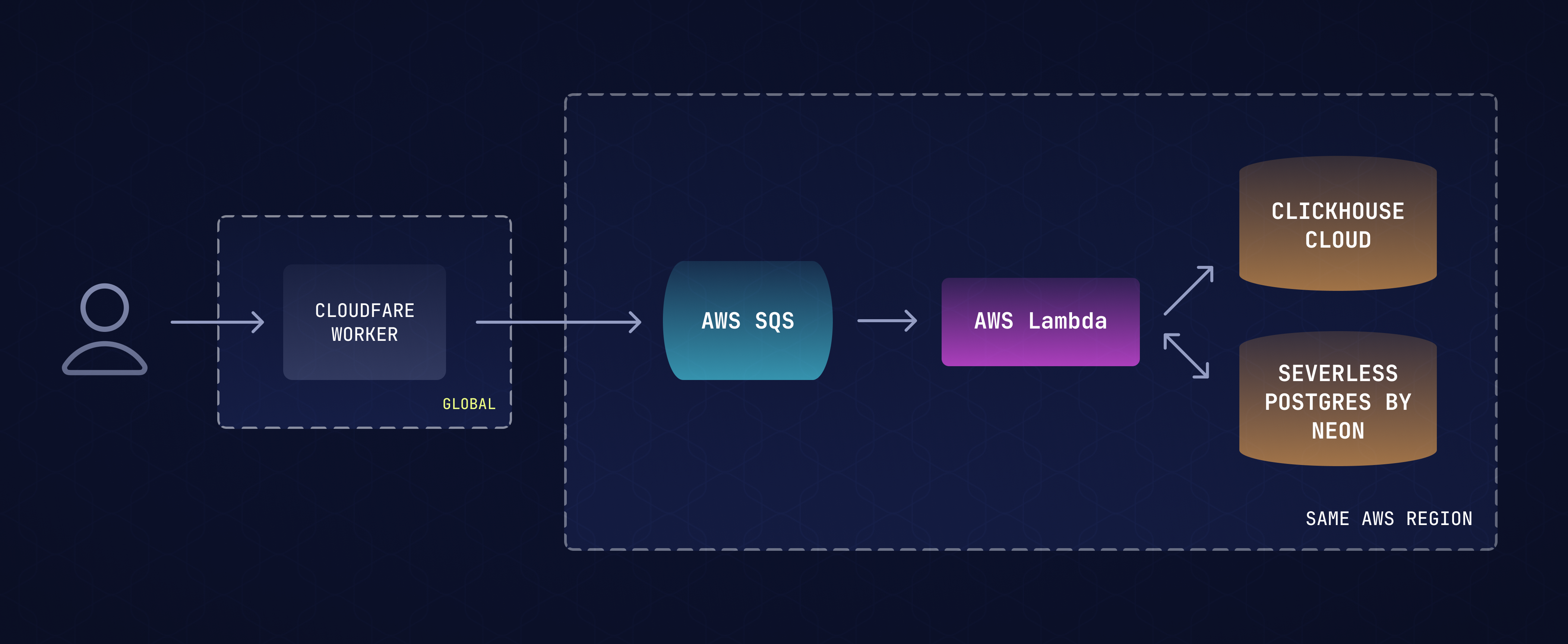

Keeping the guiding principles in mind, here’s how we built our event ingestion pipeline to allow us to reliably ingest millions of events with extremely low latency worldwide.

The Global Entry Point: Cloudflare Workers

We host our Ingestion API on Cloudflare Workers, keeping us close to our users worldwide. The workers contain the bare essentials to acknowledge requests and place them in a queue for processing, minimizing any room for bugs.

The Glue: Amazon Simple Queue Service (SQS)

After the acknowledgment, the Cloudflare Worker immediately pushes events into an SQS queue. SQS is the robust middleman that holds the events until they're ready for processing. Its built-in durability and reliability features, like automatic retry and a dead letter queue, ensure no event gets left behind. This aligns perfectly with our need for a high-reliability system.
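To illustrate how retries and a dead letter queue fit together: an SQS queue takes a `RedrivePolicy` attribute, and once a message has been received `maxReceiveCount` times without being deleted, SQS moves it to the queue named by `deadLetterTargetArn`. The snippet below is a minimal sketch only; the ARN and the retry count are placeholder assumptions, not the values we run in production.

```python
import json

# Placeholder values for illustration only (hypothetical ARN and count).
redrive_policy = {
    "deadLetterTargetArn": "arn:aws:sqs:us-east-1:123456789012:events-dlq",
    "maxReceiveCount": "5",
}

# SQS expects the policy as a JSON string under the "RedrivePolicy" attribute.
queue_attributes = {"RedrivePolicy": json.dumps(redrive_policy)}
print(queue_attributes["RedrivePolicy"])
```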

The Workhorse: AWS Lambda

At the other end of our queue, we use AWS Lambda to consume and process events in batches. Lambda seamlessly scales up or down depending on how many events are in the queue. We did some minor tuning of our batch sizes and Lambda runtime settings, but overall, it’s very hands-off.

The Analytics Engine: ClickHouse Cloud

For event storage, ClickHouse was a no-brainer. It's optimized for high-speed, high-volume scenarios. With ClickHouse Cloud, we don’t need to worry about the low-level details of managing the cluster. ClickHouse allows us to deliver valuable insights over millions of events in near real time within our web app.

The Transactional Cornerstone: Serverless Postgres by Neon

For transactional data, it’s hard to beat Postgres. Neon’s database branching gives us confidence when we test schema changes, while their separation of compute and storage allows for seamless scaling.

Key Decisions

Entrypoint

Our entry point on Cloudflare Workers is intentionally lightweight. Its sole purpose is to accept events and place them onto the queue as quickly as possible. We do minimal verification of the event payload and ingestion key before placing it in the queue and do not interact with any databases. This ensures that even if our database is experiencing downtime, we can still ingest events into our platform. Instead of being dropped, events would simply appear in the web application after a short delay.

The tradeoff is that it may not always be apparent to users if they are using an invalid ingestion key or event payload format. They will receive a 202 response, but the event will never appear. We decided this tradeoff was worth it, considering how important it is that we can always ingest events.
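The flow described above can be sketched roughly as follows. This is a Python sketch of the decision logic only, not the Worker's actual code: the `handle_ingest` function, the `enqueue` callback (standing in for the SQS send), the `authorization` header, the field names, and the choice to reject an absent key or unparseable body are all assumptions made for illustration.

```python
import json
import uuid
from datetime import datetime, timezone
from typing import Callable


def handle_ingest(headers: dict, raw_body: str, enqueue: Callable[[str], None]) -> int:
    """Return the HTTP status for one ingestion request (hypothetical sketch)."""
    # Cheap, database-free checks only. Whether the key is actually valid
    # is decided later by the consumer, so a bad key still gets a 202 here.
    key = headers.get("authorization", "")
    if not key:
        return 401
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError:
        return 400
    if not isinstance(payload, dict):
        return 400

    # Stamp identifiers once, at the edge, so every redelivery of this
    # message carries the same values.
    message = {
        "ingestion_key": key,
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "payload": payload,
    }
    enqueue(json.dumps(message))
    return 202
```

Because nothing here touches a database, the handler keeps accepting events during a database outage; the cost is that a well-formed request with an unknown key is still acknowledged.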

Batch Processing

ClickHouse is optimized for inserting rows in batches. We have to maintain a delicate balance of time to insertion (when the event will appear in the web application), cost, and performance. We use Lambda’s built-in batching mechanism and ClickHouse Cloud’s async insert functionality to keep our average insert rate at around one insert per second, in line with ClickHouse best practices.
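To make that balance concrete, here is a rough back-of-the-envelope sketch. It assumes events arrive evenly throughout the day and that every batch becomes exactly one insert; both are simplifications, and the function name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def batch_size_for_target(events_per_day: int, inserts_per_second: float = 1.0) -> float:
    """Average events per insert needed to hit the target insert rate."""
    events_per_second = events_per_day / SECONDS_PER_DAY
    return events_per_second / inserts_per_second


print(round(batch_size_for_target(1_000_000), 1))   # 11.6
print(round(batch_size_for_target(10_000_000), 1))  # 115.7
```

Under these assumptions, batches averaging about 12 events give one insert per second at 1 million events a day, and about 116 at 10 million. Real traffic is bursty, which is part of why the time spent waiting for a full batch matters as much as the batch size.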

It took some experimentation to find the right balance of batch size and time to wait for a full batch to optimize for our goals. We also need to handle partial batch failures so we don’t retry an entire batch if there is an issue with only a few events. Fortunately, AWS makes this straightforward with their partial batch responses.
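A minimal sketch of partial batch handling, assuming the SQS event source mapping has `ReportBatchItemFailures` enabled. The `process_batch` function and the `insert_rows` callback (standing in for the ClickHouse batch write) are hypothetical; the `Records`, `messageId`, `body`, `batchItemFailures`, and `itemIdentifier` fields follow Lambda's SQS integration.

```python
import json
from typing import Callable, List


def process_batch(event: dict, insert_rows: Callable[[List[dict]], None]) -> dict:
    """Insert every parseable record in one batch and report only the failures."""
    rows, row_ids, failures = [], [], []
    for record in event.get("Records", []):
        try:
            rows.append(json.loads(record["body"]))
            row_ids.append(record["messageId"])
        except json.JSONDecodeError:
            # A malformed message fails on its own; the rest of the batch proceeds.
            failures.append({"itemIdentifier": record["messageId"]})

    if rows:
        try:
            insert_rows(rows)  # one batched write for all good records
        except Exception:
            # The write itself failed, so every record in it must be retried.
            failures.extend({"itemIdentifier": mid} for mid in row_ids)

    # Messages not listed here are treated as processed and removed from the queue.
    return {"batchItemFailures": failures}
```

A Lambda entry point would wrap this as something like `handler(event, context)` and return the result unchanged. Messages reported as failed become visible on the queue again and are retried.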

Deduplication

We use an SQS standard queue for maximum throughput, which means each message is delivered at least once but may be delivered multiple times. To keep our ingestion pipeline idempotent, we use ClickHouse's ReplacingMergeTree table engine to dedupe events. An important note here is that it de-dupes rows that share the same sorting key (the table's ORDER BY columns, which by default are also its primary key), so you will want to ensure those columns always hold the same values for a given event. For us, that is the organization ID, timestamp, trace ID, and event ID, all of which are set in the Cloudflare Worker so they remain the same if the SQS message is consumed more than once.

A tradeoff with this approach is that there is a small chance duplicate events could be shown, because ClickHouse dedupes during background merges rather than at insert time. We decided this tradeoff was acceptable since it allows us to maintain a high level of throughput in our ingestion pipeline. One alternative would be to use a FIFO SQS queue, which deduplicates messages itself but comes with throughput limits and higher cost.
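The effect of deduplicating on a fixed key can be illustrated with a small simulation. This is a sketch of the idea in Python, not ClickHouse itself: the `merge_duplicates` function and the column names are hypothetical, and it only mimics how a merge collapses rows that share a key, keeping the most recently inserted one.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical column names for the key described above.
KEY_COLUMNS = ("organization_id", "timestamp", "trace_id", "event_id")


def merge_duplicates(rows: Iterable[dict]) -> List[dict]:
    """Collapse rows that share the same key, keeping the last one inserted."""
    latest: Dict[Tuple, dict] = {}
    for row in rows:  # rows are assumed to be in insertion order
        latest[tuple(row[column] for column in KEY_COLUMNS)] = row
    return list(latest.values())


event = {
    "organization_id": "org-1",
    "timestamp": "2024-01-01T00:00:00Z",
    "trace_id": "trace-1",
    "event_id": "event-1",
    "message": "hello",
}
redelivered = dict(event)  # same SQS message consumed a second time
print(len(merge_duplicates([event, redelivered])))  # 1
```

If the consumer generated a fresh timestamp or event ID on every delivery, the two rows would have different keys and both would survive the merge, which is why those values are fixed before the message reaches the queue.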

Summary

And that's the Autoblocks ingestion pipeline in a nutshell. We keep our guiding principles at the forefront when making decisions and rely heavily on serverless infrastructure so we can iterate quickly while continuing to scale. We're well on our way to the 10 million events a day mark, so stay tuned for more insights as we grow.