Since our launch, Autoblocks has enabled companies of all shapes and sizes to accelerate their GenAI product development. We take great pride in equipping product teams with the tools they need to deliver delightful and useful AI experiences. Infrastructure isn’t glamorous, but it’s not lost on us that great tooling is required to ensure AI is a positive force in the world.

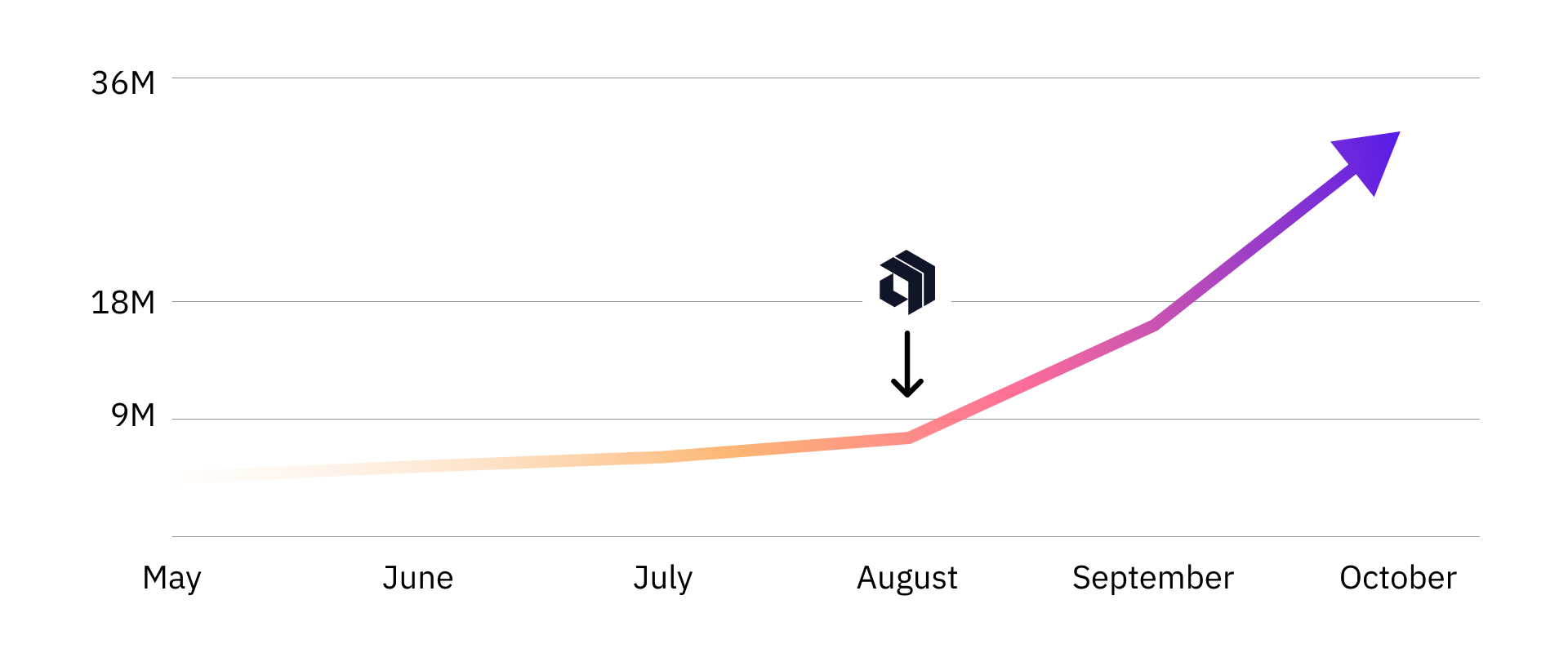

Every day, our users send millions of events to Autoblocks, and that number is climbing rapidly. We have served as a secret weapon for companies like Gamma, enabling them to fuel their exponential growth by continuously refining their AI products, and Clickhouse, which used Autoblocks to bring its GenAI features to market with exceptional speed.

Caption: Gamma's impressive growth curve post-Autoblocks integration.

Autoblocks was born with a clear North Star: enable rapid and flexible development of GenAI products. Since our launch, we've rolled out new features and enhancements, each designed to streamline this process further.

Introducing Autoblocks 2.0: The GenAI Product Platform

Autoblocks 2.0 is a GenAI product platform packed with LLMOps tools that have become indispensable for modern AI product teams and businesses.

Our latest release enriches the platform with:

- Prompt Playground: A sandbox for crafting and testing prompts with immediate feedback.

- Full-Pipeline Replays: Run inputs through your pipeline to simulate outcomes (and uncover opportunities for improvement).

- Prompt Management: Organize and optimize your prompts to make sure they’re producing the best user outcomes.

- Continuous Evaluations: Constantly assess your GenAI products in production and derive insights for informed product improvements.

But these new features only tell part of the story.

Product teams get access to this tooling in a unified, collaborative workspace that lets them iterate with unprecedented speed and efficiency. Autoblocks’ full-stack offering ensures you have all the context you need, without any dependencies that slow you down.

Cross-functional collaboration made seamless.

In this day and age, speed is of the essence. Companies are racing to reimagine their “jobs to be done” with GenAI in the picture. Teams that don’t address the unique challenges of building with AI fall behind competitors that do.

In turn, tools like Autoblocks become a necessary companion for getting out of the quicksand and racing toward innovative solutions. Rather than switching between a prompt playground, logging tooling, and CSV files containing input examples, teams can unify an otherwise fragmented workflow with the help of Autoblocks.

The Autoblocks environment is tailored to the unique demands of GenAI product development:

- Enhanced Cross-Functional Collaboration: Building great GenAI products requires input from a diverse set of stakeholders — from product managers to UX designers, developers, and domain experts. Autoblocks fosters seamless collaboration, ensuring miscommunication and bottlenecks don’t slow you down.

- Efficient Context Switching: With Autoblocks, the need to juggle multiple tools vanishes. Teams can use the tools they need, when they need them. Paired with increased collaboration, our unified home for GenAI product development supercharges your iteration cycle.

With Autoblocks, product teams can:

- Share links to traces and test outputs effortlessly,

- Collaboratively curate datasets using real-time or static data, and

- Co-manage prompts, driving a consistent, high-quality product experience.

Proxyless by default. No provider dependencies.

Unlike other LLMOps platforms, Autoblocks is inherently proxyless.

This means you can integrate directly with GenAI API providers without the hassle of setting up a proxy. This keeps implementation simple and ensures your product won’t crumble after the next OpenAI Dev Day.

While proxies have their perks, like better control over the data flow between an API provider and the user, they lock you into an inflexible system. We believe that’s not a trade-off you should have to make.

Proxy solutions can:

- Bog down your system with extra latency,

- Complicate your code,

- Increase your dependency on a third party, and

- Slow down your innovation if they don’t keep up with the rapid speed of GenAI advancements.

Autoblocks is designed to be proxyless from the ground up. We put you in the driver's seat and let you build your products as you see fit. We don’t box you in; we sit in the passenger seat and help you along your journey.
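To make the distinction concrete, here is a minimal Python sketch of the proxyless pattern. It is not the Autoblocks SDK: `call_provider`, `EventSink`, and `generate` are hypothetical names, and the provider call is stubbed so the example runs on its own. What it aims to show is that the application talks to the model provider directly, while telemetry travels on a separate, best-effort path whose failures never block the response.

```python
import time
import uuid


def call_provider(prompt):
    """Hypothetical stand-in for a direct call to a GenAI provider's SDK."""
    return f"echo: {prompt}"


class EventSink:
    """Hypothetical telemetry sink; a real one would send events over HTTP."""

    def __init__(self, fail=False):
        self.fail = fail
        self.events = []

    def send(self, event):
        if self.fail:
            raise ConnectionError("telemetry backend unreachable")
        self.events.append(event)


def generate(prompt, sink, provider=call_provider):
    """Call the provider directly, then report what happened out of band."""
    trace_id = str(uuid.uuid4())
    started = time.perf_counter()
    response = provider(prompt)  # direct call: no proxy in the request path
    latency_ms = (time.perf_counter() - started) * 1000

    try:
        sink.send({
            "trace_id": trace_id,
            "message": "ai.response",
            "properties": {
                "prompt": prompt,
                "response": response,
                "latency_ms": latency_ms,
            },
        })
    except ConnectionError:
        # Telemetry is best effort; an outage must not break the product.
        pass
    return response
```

With this shape, swapping providers or adopting a newly released model only touches `provider`; the telemetry path stays the same, and a telemetry outage still leaves `generate` returning the provider's response.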

Read more here: The Dangers of Using LLMOps as a Proxy

All the data you need in one place. And the scale to handle it.

Building delightful GenAI products doesn’t happen in isolation. Rather than constraining the type of data you can send into Autoblocks, we let you send any relevant events that help you better understand your GenAI product experience, including:

- Application log data,

- User interactions,

- Database calls, and

- Retrieval data.
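As an illustration of how such varied events might fit together, the Python sketch below tags each event with a shared trace ID and groups the events into time-ordered traces. The `Event` shape, the `group_into_traces` helper, and the message names are assumptions made for this example, not the Autoblocks event schema.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Event:
    """Hypothetical event shape: any payload, tied to a trace by ID."""
    trace_id: str
    message: str
    timestamp: float
    properties: dict = field(default_factory=dict)


def group_into_traces(events):
    """Group events by trace ID and order each trace by timestamp."""
    traces = defaultdict(list)
    for event in events:
        traces[event.trace_id].append(event)
    return {
        trace_id: sorted(trace, key=lambda e: e.timestamp)
        for trace_id, trace in traces.items()
    }


# Events of different kinds, arriving out of order.
events = [
    Event("trace-1", "ai.response", 3.0, {"model": "example-model"}),
    Event("trace-1", "user.message", 1.0, {"text": "Summarize my deck"}),
    Event("trace-2", "user.feedback", 5.0, {"rating": "thumbs_down"}),
    Event("trace-1", "retrieval.results", 2.0, {"documents": 4}),
]

traces = group_into_traces(events)
for trace_id, trace in traces.items():
    print(trace_id, [e.message for e in trace])
```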

Organized into intuitive traces, this data provides a holistic view of user interactions. This is beneficial for countless reasons, including:

- Efficient Debugging: Say goodbye to constant tool switching.

- Precise Filtering: Home in on the data you need with pinpoint accuracy.

- Powerful Visualizations: Craft insightful charts and graphs.

- Quality Fine-Tuning Datasets: Curate exceptional datasets to train your AI models on.

- Comprehensive Replays: Compare and contrast across different event types.
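As a rough illustration of the filtering and dataset-curation ideas above, the Python sketch below keeps only highly rated traces and serializes them as JSON Lines. The trace layout (plain dictionaries with `prompt`, `response`, and `rating` keys) and the helper names are hypothetical and do not reflect any Autoblocks export format.

```python
import json


def curate_dataset(traces, min_rating=4):
    """Keep prompt/response pairs from traces rated at or above min_rating."""
    rows = []
    for trace in traces:
        # Unrated traces default to 0 and are therefore excluded.
        if trace.get("rating", 0) >= min_rating:
            rows.append({
                "prompt": trace["prompt"],
                "completion": trace["response"],
            })
    return rows


def to_jsonl(rows):
    """Serialize rows as JSON Lines, one JSON object per line."""
    return "\n".join(json.dumps(row) for row in rows)


traces = [
    {"prompt": "Summarize Q3", "response": "Revenue grew.", "rating": 5},
    {"prompt": "Draft a title", "response": "Untitled", "rating": 2},
    {"prompt": "Outline a talk", "response": "1. Intro"},  # unrated
]

dataset = curate_dataset(traces)
print(to_jsonl(dataset))
```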

Built for scale

Our infrastructure is robust and designed to handle data volumes well beyond what other solutions are built for. We're the trusted platform for industry leaders like Gamma, and we're ready to support your growth too.

Read more about our scalability in action: Scaling our Ingestion API from 0 to 1 Million Daily Events